What is Write Amplification (WAF)

Understanding WAF: The Key to Mastering SSD Performance

As I mentioned in [this post], WAF is the most crucial topic to understand if you want to master how SSDs work and get the most out of your drives.

Write amplification, usually quantified as the write amplification factor (henceforth just WAF), can happen at different levels of the software stack. For instance, databases have write amplification because some of their writes aren't user data at all; they only move data from one place to another. RocksDB defines WAF for databases:

"Write amplification is the ratio of bytes written to storage versus bytes written to the database."

https://github.com/facebook/rocksdb

In this post, we are talking specifically about WAF at the SSD level. The SSD receives a certain amount of host writes and, because of garbage collection, has to write more than that amount to the NAND flash. You can think of WAF as a measure of how efficient the garbage collection algorithms, and the SSD as a whole system, are. The formula for WAF is very simple:

WAF = media writes / host writes

Sometimes you will see it as:

WAF = NAND writes / host writes

Why is write amp bad?

WAF reduces application performance, decreases SSD endurance, and increases the power spent per host write, all in proportion to its value. Take a simple example:

| WAF | Write Performance (MB/s) | Endurance (TBW) | Efficiency (IOPS and BW per watt) |
|---|---|---|---|
| 1 | Random write = sequential write | TBW = NAND PE Cycles * Capacity | Baseline |
| 2 | Random write = sequential write / 2 | TBW = NAND PE Cycles * Capacity / 2 | Baseline / 2 |
| 5 | Random write = sequential write / 5 | TBW = NAND PE Cycles * Capacity / 5 | Baseline / 5 |
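To make the scaling in the table concrete, here is a minimal Python sketch. The drive parameters (3,000 P/E cycles, 1.92 TB, 2,000 MB/s sequential write) are hypothetical numbers picked only for illustration, and the model deliberately ignores every effect other than WAF.

```python
def endurance_tbw(pe_cycles: int, capacity_tb: float, waf: float) -> float:
    """Terabytes the host can write before the NAND wears out: PE cycles * capacity / WAF."""
    return pe_cycles * capacity_tb / waf


def random_write_mbps(seq_write_mbps: float, waf: float) -> float:
    """Host-visible random write throughput when NAND bandwidth is shared with GC."""
    return seq_write_mbps / waf


if __name__ == "__main__":
    # Hypothetical drive, for illustration only.
    pe_cycles, capacity_tb, seq_mbps = 3000, 1.92, 2000.0
    for waf in (1, 2, 5):
        print(f"WAF {waf}: {random_write_mbps(seq_mbps, waf):7.1f} MB/s random write, "
              f"{endurance_tbw(pe_cycles, capacity_tb, waf):7.1f} TBW")
```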

Why does WAF happen?

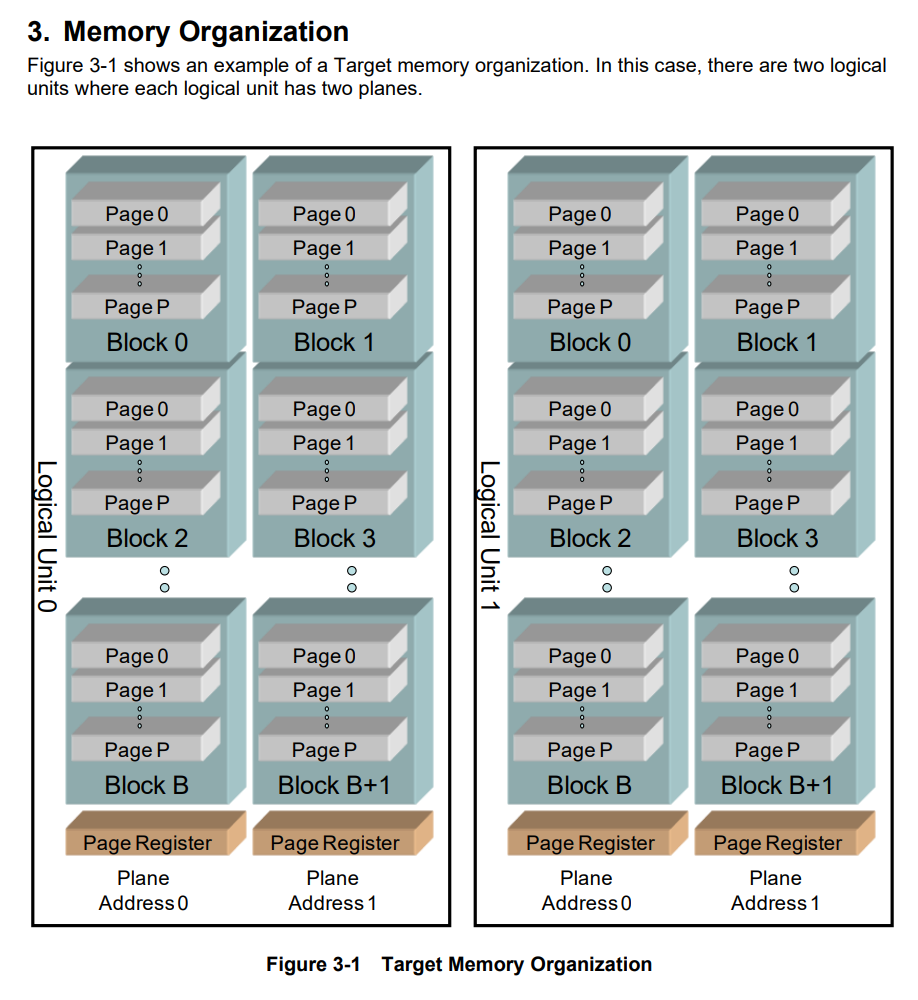

NAND flash is programmed in pages (typically 16kB) and erased in blocks (each block has thousands of pages). A block has to be erased before the SSD can write to it again, so if stale or invalid data is mixed in with good data, the garbage collection (GC) algorithms have to move the valid data to a new erase block and then erase the block that held the invalid data. Valid in this case just means actual host data that the SSD has acknowledged and is still tracking. This movement of valid data to another open block is what causes WAF, because these are writes internal to the SSD that the host doesn't know about.

How does overprovisioning (OP) help WAF?

Because NAND flash has to be erased in whole blocks before a new page can be written, the SSD always needs some spare area to run these GC algorithms. This spare area is called overprovisioning. OP is real capacity on the NAND flash that the user can't access, e.g. a 1.92TB SSD may have 2048GB or more of actual flash. The SSD will also use any LBAs that haven't been written to as spare area, referred to as "deallocated", which is why the SSD performs much better when it is empty. TRIM is the way for the host to tell the SSD that it can deallocate, or stop tracking, the user data in the associated LBAs. More OP gives the drive more physical NAND to wear-level the writes over, but most importantly the improved GC efficiency (more free space to work with) results in lower WAF.

- 1 DWPD data center drives typically have around 7-10% OP, and a maximum WAF of 5-6 during a 4kB random write workload in steady-state

- 3 DWPD enterprise drives typically have around 25-30% OP, and a maximum WAF of 2-3 during a 4kB random write workload in steady-state (endurance is measured under the JEDEC workload, which should have a similar WAF to 4kB random write)
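The link between OP and WAF can be explored with a toy simulation. The sketch below is not how any real firmware works: it assumes a page-mapped drive with a single open block, greedy victim selection (the closed block with the fewest valid pages), uniform random single-page writes, and tiny hypothetical geometry (128 blocks of 32 pages). Real drives use far larger blocks and smarter GC, so only the trend is meaningful, not the absolute numbers.

```python
import random


def simulate_waf(op_fraction, blocks=128, pages_per_block=32, seed=0):
    """Toy page-mapped SSD with greedy GC; returns WAF under uniform random page writes."""
    physical_pages = blocks * pages_per_block
    logical_pages = int(physical_pages / (1 + op_fraction))  # user-visible capacity
    if physical_pages - logical_pages < 4 * pages_per_block:
        raise ValueError("this toy model needs at least four blocks of spare area")

    rng = random.Random(seed)
    location = [None] * logical_pages        # logical page -> (block, slot) of the valid copy
    contents = [[] for _ in range(blocks)]   # block -> logical pages programmed so far
    valid = [0] * blocks                     # block -> number of still-valid pages
    free_blocks = list(range(1, blocks))     # erased blocks; block 0 starts as the open block
    state = {"open": 0, "nand_writes": 0}

    def program(lpn):
        """Program one page into the open block and invalidate the older copy, if any."""
        if len(contents[state["open"]]) == pages_per_block:
            state["open"] = free_blocks.pop()
        old = location[lpn]
        if old is not None:
            valid[old[0]] -= 1
        blk = state["open"]
        contents[blk].append(lpn)
        location[lpn] = (blk, len(contents[blk]) - 1)
        valid[blk] += 1
        state["nand_writes"] += 1

    def collect_garbage():
        """Relocate valid pages out of the closed block with the fewest, then erase it."""
        free = set(free_blocks)
        victim = min((b for b in range(blocks) if b != state["open"] and b not in free),
                     key=lambda b: valid[b])
        for slot, lpn in enumerate(contents[victim]):
            if location[lpn] == (victim, slot):   # still the current copy, so it must move
                program(lpn)
        contents[victim] = []                     # erase
        valid[victim] = 0
        free_blocks.append(victim)

    def host_write(lpn):
        while len(free_blocks) < 2:               # keep one erased block in reserve for GC
            collect_garbage()
        program(lpn)

    for lpn in range(logical_pages):              # fill the drive once, sequentially
        host_write(lpn)
    for _ in range(2 * logical_pages):            # random-write warm-up toward steady state
        host_write(rng.randrange(logical_pages))

    measured = 2 * logical_pages
    nand_before = state["nand_writes"]
    for _ in range(measured):
        host_write(rng.randrange(logical_pages))
    return (state["nand_writes"] - nand_before) / measured


if __name__ == "__main__":
    for op in (0.07, 0.28):
        print(f"OP {op:.0%}: simulated WAF {simulate_waf(op):.2f}")
```

Running it with 7% and 28% OP should show the same direction as the bullets above: the drive with more spare area finds emptier victim blocks and relocates less data per erase.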

What is an indirection unit (IU) and FTL?

Typical data center and enterprise SSDs track LBAs in 4kB chunks in the FTL (Flash Translation Layer). The FTL is the map from host LBAs to physical locations on NAND, and the granularity of that map is called the indirection unit (IU). 4kB is typical for most SSDs but requires about 1GB of DRAM per 1TB of NAND. This clearly becomes a problem on really high-capacity SSDs like 32TB or 64TB, where putting 32GB or 64GB of DRAM on a single SSD would be incredibly costly, so those drives, typically QLC, move to something like a 16kB IU to reduce the DRAM requirement by a factor of 4. The trade-off is that any host write smaller than the IU now causes write amplification. Even on a drive with a 4kB IU, extra WAF can be caused by unaligned host writes that cross an IU boundary or by small writes (e.g. each 512B write still requires a full FTL update for 4kB).

| IU Size | Host Write Size | WAF |
|---|---|---|
| 4kB | 4kB, 8kB, 16kB, 32kB, 64kB, 128kB | 1 |
| 4kB | 512B | 8 |
| 16kB | 16kB, 32kB, 64kB, 128kB | 1 |
| 16kB | 4kB | 4 |
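The IU effect in the table can be estimated with a few lines of Python. This is a simplified sketch that assumes the drive rewrites every IU a host write touches in full; the function name and parameters are made up for illustration, and the result covers only the IU contribution, not the GC-driven WAF discussed earlier.

```python
def iu_write_amplification(offset_bytes: int, length_bytes: int, iu_bytes: int) -> float:
    """Bytes the drive must rewrite (whole IUs touched) divided by bytes the host wrote."""
    if length_bytes <= 0 or iu_bytes <= 0:
        raise ValueError("length and IU size must be positive")
    first_iu = offset_bytes // iu_bytes
    last_iu = (offset_bytes + length_bytes - 1) // iu_bytes
    ius_touched = last_iu - first_iu + 1
    return ius_touched * iu_bytes / length_bytes


KIB = 1024
print(iu_write_amplification(0, 16 * KIB, 16 * KIB))      # aligned 16kB write, 16kB IU -> 1.0
print(iu_write_amplification(0, 4 * KIB, 16 * KIB))       # 4kB write, 16kB IU -> 4.0
print(iu_write_amplification(2 * KIB, 4 * KIB, 4 * KIB))  # unaligned 4kB write, 4kB IU -> 2.0
```

The last call shows why alignment matters: a 4kB write that starts 2kB into an IU straddles two IUs, so twice as much data has to be rewritten.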

How to measure WAF

Remember, the formula for WAF is very easy: NAND writes over host writes. Unfortunately, NAND writes aren't part of the NVMe SMART standard due to concerns over reverse engineering early SSDs. Most vendors don't care today and make the data available through a vendor-specific log or the OCP C0 log page. Once you can grab NAND writes and host writes from nvme-cli, you need a test that records both counters before and after the workload. The delta of NAND writes divided by the delta of host writes gives you the workload-specific WAF, provided the workload runs long enough for the WAF to reach a steady state.
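As a minimal sketch of the before/after approach, the Python below computes workload WAF from two counter snapshots. The `Counters` class and the byte values are hypothetical; in practice the numbers would come from whichever log page your drive exposes, converted to bytes first.

```python
from dataclasses import dataclass


@dataclass
class Counters:
    """Lifetime counters read from the drive at one point in time, both in bytes."""
    host_bytes: int
    nand_bytes: int


def workload_waf(before: Counters, after: Counters) -> float:
    """WAF for the interval between two snapshots: delta NAND writes / delta host writes."""
    host_delta = after.host_bytes - before.host_bytes
    nand_delta = after.nand_bytes - before.nand_bytes
    if host_delta <= 0:
        raise ValueError("no host writes recorded between the two snapshots")
    return nand_delta / host_delta


# Hypothetical snapshots taken before and after a random write test.
before = Counters(host_bytes=10_000_000_000_000, nand_bytes=12_000_000_000_000)
after = Counters(host_bytes=12_000_000_000_000, nand_bytes=20_000_000_000_000)
print(f"workload WAF = {workload_waf(before, after):.2f}")  # (20 - 12) / (12 - 10) = 4.00
```

Using deltas rather than lifetime totals keeps earlier workloads on the drive from diluting the measurement.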

Get the latest NVMe CLI

If you are on an older distro, the package manager will install an old version of nvme-cli, and you will not be able to grab things like the OCP C0 log page. You need to grab the latest AppImage:

sudo apt install fuse

wget https://monom.org/linux-nvme/upload/AppImage/nvme-cli-latest-x86_64.AppImage

sudo chmod +x nvme-cli-latest-x86_64.AppImage

sudo mv nvme-cli-latest-x86_64.AppImage /usr/local/bin/nvme

nvme --version

Output:

nvme version 2.7.1 (git 2.7.1)

libnvme version 1.7 (git 1.7)

You can measure WAF with NVMe-CLI. To get host writes:

sudo nvme smart-log /dev/nvme0n1

Output:

Data Units Written : 54706770 (28.01 TB)

According to the NVMe spec, SMART Data Units Written is reported in thousands of 512-byte units, so one data unit is 512B * 1000. In my example:

54706770 * 512 * 1000 = 28,009,866,240,000 bytes, or 28.01 TB
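The same conversion in Python, as a small sketch. The parser assumes the human-readable `smart-log` line looks like the one shown above; other nvme-cli versions may format it differently, so treat the regular expression as an assumption rather than a stable interface.

```python
import re


def parse_data_units_written(smart_log_text: str) -> int:
    """Pull the raw Data Units Written counter out of smart-log text output."""
    match = re.search(r"data[ _]units[ _]written\s*:\s*([\d,]+)", smart_log_text, re.IGNORECASE)
    if match is None:
        raise ValueError("no Data Units Written line found")
    return int(match.group(1).replace(",", ""))


def data_units_to_bytes(data_units: int) -> int:
    """One NVMe data unit is 1000 units of 512 bytes."""
    return data_units * 512 * 1000


line = "Data Units Written : 54706770 (28.01 TB)"
host_bytes = data_units_to_bytes(parse_data_units_written(line))
print(host_bytes, f"{host_bytes / 1000**4:.2f} TB")  # 28009866240000 28.01 TB
```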

To find NAND writes you have two methods: the vendor-unique log page or the OCP C0 log page. Here is an example with a Solidigm drive (the intel plugin will work on the older drives):

sudo nvme solidigm smart-log-add /dev/nvme0n1

Output:

0xf4 nand_bytes_written 100 1068937

0xf5 host_bytes_written 100 834759

Thankfully, these two fields are in the same units, so you just need to divide nand_bytes_written by host_bytes_written to get the WAF. Solidigm and Intel drives report these fields in 32MiB units, so you can cross-check host_bytes_written against the regular SMART Data Units Written.
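Plugging the example output into Python gives the lifetime WAF for this drive and lets you sanity-check the host counter. The constant name is made up; the 32MiB unit is the one stated above.

```python
SOLIDIGM_UNIT_BYTES = 32 * 1024 * 1024  # 32 MiB per count

nand_units = 1068937  # raw value of 0xf4 nand_bytes_written
host_units = 834759   # raw value of 0xf5 host_bytes_written

lifetime_waf = nand_units / host_units
host_tb = host_units * SOLIDIGM_UNIT_BYTES / 1000**4

print(f"lifetime WAF = {lifetime_waf:.2f}")  # 1.28
print(f"host writes  = {host_tb:.2f} TB")    # 28.01 TB
```

The converted host writes line up with the 28.01 TB reported by the standard SMART log.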

Most newer drives will support the OCP Datacenter NVMe SSD Spec and the C0 log page:

sudo nvme ocp smart-add-log /dev/nvme0n1

Output:

Physical media units written - 0 35867584823296

Thankfully, this value is just in bytes, so converting to TB is as easy as Physical media units written / (1000^4).
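Combining the OCP value with SMART Data Units Written gives a lifetime WAF. This sketch assumes both example outputs in this post were read from the same drive at about the same time; the function name is hypothetical.

```python
def lifetime_waf(physical_media_bytes: int, data_units_written: int) -> float:
    """OCP physical media bytes divided by host bytes from SMART Data Units Written."""
    host_bytes = data_units_written * 512 * 1000
    if host_bytes <= 0:
        raise ValueError("host writes must be greater than zero")
    return physical_media_bytes / host_bytes


nand_bytes = 35867584823296
waf = lifetime_waf(nand_bytes, 54706770)
print(f"NAND writes = {nand_bytes / 1000**4:.2f} TB, lifetime WAF = {waf:.2f}")
# NAND writes = 35.87 TB, lifetime WAF = 1.28
```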

Measuring over time

Measuring WAF over time requires logging the NAND and host writes, which is best done with observability tools like Prometheus and Grafana. I run my monitoring stack completely in Docker because the tools run much better in a cloud-native environment.

A good nvme-cli exporter with OCP C0 support didn't exist, so I started one! I'm not a developer, so Python folks, or anyone who wants to rewrite it in Rust or Go, please do so. I did this as a POC, but it does seem to work. If all the storage folks from OCP want to collaborate in one place, I can volunteer to make some amazing dashboards to monitor real-time and lifetime WAF, as well as all the other NVMe SMART and OCP C0 log page fields!

https://github.com/jmhands/nvme_exporter/tree/main

I'm looking forward to creating some really cool experiments with this to graph WAF over time during some random write workloads, but for now, I need to throw some smaller drives in this system so the tests don't take forever!