Getting Started with NVMe over Fabrics Using TCP

NVMe over Fabrics (NVMe-oF) is a compelling alternative to traditional network storage protocols like NFS or SMB because it lets you leverage the raw performance of NVMe: low latency, scalability, low CPU overhead, and the flexibility of not depending on a filesystem. NVMe-oF over RDMA or Fibre Channel requires specific networking gear, such as RDMA-capable NICs or Fibre Channel HBAs. For NVMe-oF over TCP (NVMe/TCP), all you need is a system running a modern Linux kernel and an ordinary network connection. Exporting an NVMe drive via NVMe/TCP has many benefits:

- Simplifies Data Center Architecture: NVMe over TCP utilizes the ubiquitous TCP/IP network fabric, simplifying the integration and deployment process within existing data center infrastructures.

- Cost-Effective Performance Enhancement: By leveraging standard TCP/IP networks, NVMe over TCP avoids the need for specialized hardware, reducing deployment costs while still delivering high-performance storage access.

- Highly Scalable and Flexible: NVMe over TCP supports disaggregation and scaling across different zones and regions within the data center, enhancing flexibility in resource allocation and management.

- Raw Block Device: Exposes a raw block device with performance comparable to a direct-attached SSD, making it ideal for modern, data-heavy applications requiring rapid access and minimal latency.

- Streamlined Software Stack: Features an optimized block storage network software stack that is specifically designed for efficient operation with NVMe, reducing complexity and enhancing reliability.

- Enhanced Parallel Data Access: Facilitates parallel access to storage, maximizing the capabilities of multi-core servers and ensuring optimal performance in multi-client environments.

- No Client-Side Modifications Needed: Applications on the initiator see an ordinary NVMe block device, so they need no changes. The only client-side requirements are the nvme_tcp kernel module and nvme-cli, which eases implementation and maintenance.

Reminder: NVMe specs are completely open and free for anyone to view. You likely won’t ever need to open them unless you are a developer or engineer building NVMe solutions, but it is good to know.

I’m using it here because I have some GPU systems that are completely out of NVMe slots but need native access to NVMe drives. I could format the drives on the machine that holds them and export them over NFS or SMB. That would be harder to set up, would mean dealing with complicated permissions, and would carry much higher overhead. When I’m done, the raw block device on the target (the machine exporting the drive) is left in perfect condition, so I can remove the drive or remount it natively on the initiator system.

Method 1 – configfs script

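On the target (the machine that holds the NVMe drive), adjust the variables at the top and run this script. IP_ADDRESS must be an address assigned to the target itself. Make sure the drive is not mounted on the target while it is exported, because a filesystem mounted on two machines at once will be corrupted.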

```bash
#!/bin/bash
# Export a local NVMe drive over NVMe/TCP with the in-kernel target (nvmet), configured through configfs.
set -e

# Define variables for the IP address, NVMe device, and subsystem name
IP_ADDRESS="192.168.0.179"   # the target's own address that initiators will connect to
NVME_DEVICE="/dev/nvme1n1"   # block device to export
SUBSYSTEM_NAME="testnqn"     # subsystem NQN that initiators pass to nvme connect -n
PORT_NUMBER="4420"           # standard NVMe-oF port
TRTYPE="tcp"
ADRFAM="ipv4"
NVMET="/sys/kernel/config/nvmet"

# Load the target-side kernel modules (nvme_tcp is the initiator driver and is not needed here)
sudo modprobe nvmet
sudo modprobe nvmet-tcp

# Create the NVMe subsystem directory
sudo mkdir -p "$NVMET/subsystems/$SUBSYSTEM_NAME"

# Set the subsystem to accept any host (no allow-list of host NQNs)
echo 1 | sudo tee "$NVMET/subsystems/$SUBSYSTEM_NAME/attr_allow_any_host"

# Create namespace 1, point it at the NVMe device, and enable it
sudo mkdir -p "$NVMET/subsystems/$SUBSYSTEM_NAME/namespaces/1"
echo -n "$NVME_DEVICE" | sudo tee "$NVMET/subsystems/$SUBSYSTEM_NAME/namespaces/1/device_path"
echo 1 | sudo tee "$NVMET/subsystems/$SUBSYSTEM_NAME/namespaces/1/enable"

# Configure the NVMe-oF TCP port
sudo mkdir -p "$NVMET/ports/1"
echo "$IP_ADDRESS" | sudo tee "$NVMET/ports/1/addr_traddr"
echo "$TRTYPE" | sudo tee "$NVMET/ports/1/addr_trtype"
echo "$PORT_NUMBER" | sudo tee "$NVMET/ports/1/addr_trsvcid"
echo "$ADRFAM" | sudo tee "$NVMET/ports/1/addr_adrfam"

# Link the subsystem to the port; linking the first subsystem enables the port
sudo ln -s "$NVMET/subsystems/$SUBSYSTEM_NAME" "$NVMET/ports/1/subsystems/$SUBSYSTEM_NAME"

echo "NVMe over Fabrics configuration is set up."
```
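You may need to undo this configuration without installing nvmetcli. In that case, remove the configfs entries in the reverse order they were created, after disconnecting the initiator first. The sketch below makes a few assumptions:

- It uses the layout from the script: one subsystem, linked to one port, with one namespace.
- `nvmet_teardown`, `run`, and the `DRY_RUN` switch are hypothetical helpers, not part of nvmetcli or nvme-cli.

```shell
#!/bin/bash
# Hypothetical helper: undo the configfs setup from the script above, in reverse order.
# Assumes one subsystem, linked to one port, with one namespace.
# With DRY_RUN=1 the commands are printed instead of executed.
NVMET=/sys/kernel/config/nvmet

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

nvmet_teardown() {
    local subsys=$1 port=$2 nsid=$3
    # Unlink the subsystem from the port so initiators can no longer reach it
    run sudo rm "$NVMET/ports/$port/subsystems/$subsys"
    # Remove the port itself
    run sudo rmdir "$NVMET/ports/$port"
    # Remove the namespace, then the now-empty subsystem
    run sudo rmdir "$NVMET/subsystems/$subsys/namespaces/$nsid"
    run sudo rmdir "$NVMET/subsystems/$subsys"
}
```

For the script above, preview the commands with `DRY_RUN=1 nvmet_teardown testnqn 1 1`, then run `nvmet_teardown testnqn 1 1` to apply them.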

Now check whether the port was enabled:

```
sudo dmesg | grep nvmet_tcp
[ 6420.232049] nvmet_tcp: enabling port 1 (192.168.0.179:4420)
```

On the initiator (the system where you want to attach the drive):

```
# on the initiator system
sudo apt install nvme-cli
sudo modprobe nvme_tcp
sudo nvme discover -t tcp -a 192.168.0.179 -s 4420
Discovery Log Number of Records 2, Generation counter 14
=====Discovery Log Entry 0======
trtype: tcp
adrfam: ipv4
subtype: current discovery subsystem
treq: not specified, sq flow control disable supported
portid: 1
trsvcid: 4420
subnqn: nqn.2014-08.org.nvmexpress.discovery
traddr: 192.168.0.179
eflags: none
sectype: none
=====Discovery Log Entry 1======
trtype: tcp
adrfam: ipv4
subtype: nvme subsystem
treq: not specified, sq flow control disable supported
portid: 1
trsvcid: 4420
subnqn: testnqn
traddr: 192.168.0.179
eflags: none
sectype: none
```

Now connect the drive:

```
sudo nvme connect -t tcp -n testnqn -a 192.168.0.179 -s 4420
sudo nvme list
Node                  Generic               SN                   Model                                    Namespace  Usage                      Format           FW Rev
--------------------- --------------------- -------------------- ---------------------------------------- ---------- -------------------------- ---------------- --------
/dev/nvme1n1          /dev/ng1n1            d7c395e27e29d9e24f3c Linux                                    0x1        3.20 TB / 3.20 TB          512 B + 0 B      6.5.0-27
```

Perform a quick read test on the new raw block device; it should reach close to raw network speed. The remote drive is the entry with model Linux. Its node name depends on how many NVMe devices the initiator already has, so adjust --filename if it is not /dev/nvme1n1.
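To put "raw network speed" into the units fio reports, convert the link rate from gigabits to bytes. This is a minimal sketch under a few assumptions:

- `link_ceiling` is a hypothetical helper.
- It ignores Ethernet, IP, TCP, and NVMe/TCP header overhead, so real results land somewhat below the figure it prints.

```shell
#!/bin/bash
# Hypothetical helper: convert a link speed in Gbit/s into the theoretical maximum
# throughput in the units fio prints (MiB/s, with MB/s in parentheses).
# Protocol overhead is ignored, so treat the result as an upper bound.
link_ceiling() {
    awk -v gbit="$1" 'BEGIN {
        bytes = gbit * 1e9 / 8          # bits per second -> bytes per second
        printf "%.0f MiB/s (%.0f MB/s)\n", bytes / 1048576, bytes / 1e6
    }'
}
```

For example, `link_ceiling 10` prints `1192 MiB/s (1250 MB/s)` and `link_ceiling 25` prints `2980 MiB/s (3125 MB/s)`. These are the ceilings to compare against fio's bw figure on a 10 GbE or 25 GbE link.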

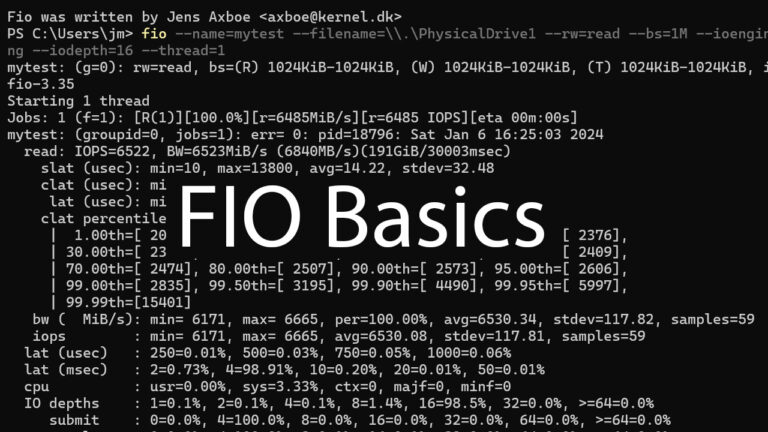

```
sudo fio --filename=/dev/nvme1n1 --rw=read --direct=1 --bs=128k --ioengine=io_uring --runtime=20 --numjobs=1 --time_based --group_reporting --name=seq_read --iodepth=16
```

Method 2 – nvmetcli

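Grab the latest nvmetcli from https://git.infradead.org/users/hch/nvmetcli.git:

```
git clone git://git.infradead.org/users/hch/nvmetcli.git
cd nvmetcli/
sudo python3 setup.py install
```

Now you can run the interactive shell with sudo nvmetcli. The interactive shell depends on the configshell-fb Python module, packaged as python3-configshell-fb on Debian and Ubuntu.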

```
sudo nvmetcli
/> ls
o- / ......................................................................................... [...]
  o- hosts ................................................................................... [...]
  o- ports ................................................................................... [...]
  | o- 1 .................. [trtype=tcp, traddr=192.168.0.179, trsvcid=4420, inline_data_size=16384]
  |   o- ana_groups .......................................................................... [...]
  |   | o- 1 ..................................................................... [state=optimized]
  |   o- referrals ........................................................................... [...]
  |   o- subsystems .......................................................................... [...]
  |     o- testnqn ........................................................................... [...]
  o- subsystems .............................................................................. [...]
    o- testnqn ............................. [version=1.3, allow_any=1, serial=d7c395e27e29d9e24f3c]
      o- allowed_hosts ....................................................................... [...]
      o- namespaces .......................................................................... [...]
        o- 1 ...... [path=/dev/nvme1n1, uuid=73a182f9-b5f6-4c09-9e32-e371a55c4817, grpid=1, enabled]
```

We can see the NVMe-oF subsystem set up by the script in Method 1. To clear it, leave the shell with exit and then run sudo nvmetcli clear. Now let's set up the same export with nvmetcli:

```
# Clear any existing configuration, then start the interactive shell
sudo nvmetcli clear
sudo nvmetcli

# Navigate to subsystems and create a subsystem
cd /subsystems
create testnqn

# Configure the subsystem to allow any host
cd testnqn
set attr allow_any_host=1

# Create namespace 1, back it with the NVMe device, and enable it
cd namespaces
create 1
cd 1
set device path=/dev/nvme1n1
enable

# Go to the ports and set up port 1
cd /ports
create 1
cd 1
set addr trtype=tcp
set addr adrfam=ipv4
set addr traddr=192.168.0.179
set addr trsvcid=4420

# Link the subsystem to the port
cd subsystems
create testnqn

# Return to the root and list the configuration
cd /
/> ls
o- / ......................................................................................... [...]
  o- hosts ................................................................................... [...]
  o- ports ................................................................................... [...]
  | o- 1 .................. [trtype=tcp, traddr=192.168.0.179, trsvcid=4420, inline_data_size=16384]
  |   o- ana_groups .......................................................................... [...]
  |   | o- 1 ..................................................................... [state=optimized]
  |   o- referrals ........................................................................... [...]
  |   o- subsystems .......................................................................... [...]
  |     o- testnqn ........................................................................... [...]
  o- subsystems .............................................................................. [...]
    o- testnqn ............................. [version=1.3, allow_any=1, serial=f58f8f13c0a10ed8062d]
      o- allowed_hosts ....................................................................... [...]
      o- namespaces .......................................................................... [...]
        o- 1 ...... [path=/dev/nvme1n1, uuid=1e5dd23c-1103-46aa-b735-8a729e806555, grpid=1, enabled]

# Save the configuration and leave the shell
saveconfig /etc/nvmet/config.json
exit
```

```
# on the initiator system
sudo apt install nvme-cli
sudo modprobe nvme_tcp
sudo nvme discover -t tcp -a 192.168.0.179 -s 4420
Discovery Log Number of Records 2, Generation counter 5
=====Discovery Log Entry 0======
trtype: tcp
adrfam: ipv4
subtype: current discovery subsystem
treq: not specified, sq flow control disable supported
portid: 1
trsvcid: 4420
subnqn: nqn.2014-08.org.nvmexpress.discovery
traddr: 192.168.0.179
eflags: none
sectype: none
=====Discovery Log Entry 1======
trtype: tcp
adrfam: ipv4
subtype: nvme subsystem
treq: not specified, sq flow control disable supported
portid: 1
trsvcid: 4420
subnqn: testnqn
traddr: 192.168.0.179
eflags: none
sectype: none
```
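The NQN to pass to nvme connect -n is the subnqn of the entry whose subtype is nvme subsystem; the other entry is the discovery controller itself. A target may export several subsystems. In that case, a small filter can pull the NQNs out of the discovery log. This is a sketch under a few assumptions:

- `discover_subnqns` is a hypothetical awk-based helper.
- It expects the plain-text output format shown above.

```shell
#!/bin/bash
# Hypothetical helper: read `nvme discover` text output on stdin and print the
# subnqn of every "nvme subsystem" entry, skipping the discovery subsystem entry.
discover_subnqns() {
    awk '
        # Remember whether the current log entry describes an NVM subsystem
        /^[[:space:]]*subtype:/ { nvm = ($0 ~ /nvme subsystem/) }
        # Print the NQN of those entries only, without the "subnqn:" label
        /^[[:space:]]*subnqn:/ && nvm {
            sub(/^[[:space:]]*subnqn:[[:space:]]*/, "")
            print
        }
    '
}
```

With the output above, `sudo nvme discover -t tcp -a 192.168.0.179 -s 4420 | discover_subnqns` should print only `testnqn`.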

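```
sudo nvme connect -t tcp -n testnqn -a 192.168.0.179 -s 4420

# To disconnect: disconnect-all drops every NVMe-oF connection on this system,
# while sudo nvme disconnect -n testnqn drops only this subsystem
sudo nvme disconnect-all
```

If you want the target configuration to persist across reboots, install the nvmet.service unit that ships with nvmetcli. On boot it runs nvmetcli restore, which reloads the configuration saved in /etc/nvmet/config.json: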

```
# Run from the directory that contains the nvmetcli clone.
# The unit starts /usr/sbin/nvmetcli; if your install put nvmetcli elsewhere
# (check with: command -v nvmetcli), adjust ExecStart in the unit file first.
sudo cp nvmetcli/nvmet.service /etc/systemd/system/
sudo systemctl daemon-reload
sudo systemctl enable nvmet.service
Created symlink /etc/systemd/system/multi-user.target.wants/nvmet.service → /etc/systemd/system/nvmet.service.
sudo systemctl start nvmet.service
sudo systemctl status nvmet.service
● nvmet.service - Restore NVMe kernel target configuration
Loaded: loaded (/etc/systemd/system/nvmet.service; enabled; vendor preset: enabled)
Active: active (exited) since Sat 2024-05-04 17:28:48 UTC; 2s ago
Process: 2832 ExecStart=/usr/sbin/nvmetcli restore (code=exited, status=0/SUCCESS)
Main PID: 2832 (code=exited, status=0/SUCCESS)
CPU: 196ms
May 04 17:28:48 wolf35 systemd[1]: Starting Restore NVMe kernel target configuration...
May 04 17:28:48 wolf35 systemd[1]: Finished Restore NVMe kernel target configuration.
```
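After a reboot, you can confirm that nvmet.service restored the export by reading configfs directly. This is a sketch under a few assumptions:

- `nvmet_exports` is a hypothetical helper. It lists each subsystem linked to each port, together with the port's address.
- The optional argument points it at a different nvmet configfs root, which lets you try it on a scratch copy of the tree.
- If your system restricts access to configfs, run it from a root shell.

```shell
#!/bin/bash
# Hypothetical helper: list which subsystems each nvmet port exports, by reading configfs.
# Optional argument: the nvmet configfs root (defaults to the real one).
nvmet_exports() {
    local root=${1:-/sys/kernel/config/nvmet}
    local link port addr svcid
    for link in "$root"/ports/*/subsystems/*; do
        # Skip the unexpanded glob when no port has any subsystem linked
        [ -e "$link" ] || continue
        # Extract the port ID from .../ports/<id>/subsystems/<nqn>
        port=${link#"$root"/ports/}
        port=${port%%/*}
        addr=$(cat "$root/ports/$port/addr_traddr")
        svcid=$(cat "$root/ports/$port/addr_trsvcid")
        echo "port $port ($addr:$svcid) -> ${link##*/}"
    done
}
```

For the setup in this guide, running `nvmet_exports` on the target should print `port 1 (192.168.0.179:4420) -> testnqn`. Empty output means nothing is currently exported.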