Storage Terminology – durability, endurance, quality, and reliability

I see a fair amount of confusion from folks in our industry getting some simple terms mixed up – durability, endurance, quality, and reliability.

Durability: The probability of data not being lost in a storage system. This is generally expressed in “nines”, where 99.99% or “four nines” durability means that on an annual basis, the system can expect to lose 0.01% of the data. Storage system durability is driven by data protection schemes like RAID, replication, and erasure coding. To calculate durability, you need to know the failure rate of the failure domain, the blast radius (how many devices fail when a larger component fails), the system rebuild time, and the number of data shards and parity shards in the system.

I made a simple durability calculator where the drive is the failure domain. Check it out here!

https://jmhands.github.io/durability/


E.g. RAID 1 would be 1 data shard and 1 parity shard. The failure rate would be the AFR of the drive, typically 0.44% for enterprise drives. Rebuild time should be measured, but we can estimate something like 24 hours for a rebuild of a simple RAID 1 of HDDs.
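To make the RAID 1 example concrete, here is a rough sketch of one way to turn those inputs into a durability number. This is not necessarily the formula the calculator linked above uses; it is a simplified approximation that assumes independent drive failures, the drive as the only failure domain, and a fixed rebuild window. The function and variable names are made up for illustration.

```python
from math import comb, log10

HOURS_PER_YEAR = 8760

def annual_loss_probability(data_shards, parity_shards, afr, rebuild_hours):
    """Approximate yearly probability of data loss for one protection group.

    Assumes independent failures and a constant rebuild time (illustrative only).
    """
    total = data_shards + parity_shards
    # Chance that one specific drive fails inside a single rebuild window.
    q = afr * rebuild_hours / HOURS_PER_YEAR
    # Chance that at least `parity_shards` of the surviving drives also fail
    # before the first failed drive is rebuilt (binomial tail).
    survivors = total - 1
    p_overlap = sum(
        comb(survivors, k) * q**k * (1 - q) ** (survivors - k)
        for k in range(parity_shards, survivors + 1)
    )
    # Expected number of "first" failures per year times the overlap chance.
    return total * afr * p_overlap

def nines(durability):
    """Convert a durability fraction such as 0.9999 into a count of nines."""
    return -log10(1 - durability)

# RAID 1 of HDDs: 1 data shard, 1 parity shard, 0.44% AFR, 24 hour rebuild.
loss = annual_loss_probability(1, 1, 0.0044, 24)
durability = 1 - loss
print(f"durability = {durability:.10f} ({nines(durability):.1f} nines)")
```

Under these assumptions the RAID 1 example lands at roughly seven nines. Shortening the rebuild time or adding parity shards pushes the number up quickly, which is why rebuild time matters so much in the calculation.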

I prefer durability over MTTDL because it is much simpler and easier to understand: you don’t need to know the drive size and bandwidth in the calculation, only indirectly for the rebuild time. This is why all the cloud guys use durability. Some MTTDL calculators out there say you have total data loss if you get one uncorrectable read error during a RAID rebuild, which is not the case with modern hardware and software data protection solutions. On large arrays with hard drives that have a UBER of 10^-15, this is certainly an issue in cases where no other data shards remain.

Quality: Quality and reliability are different metrics. Quality is the goal of reducing time-zero failures. Some lower-end drive models are not tested as robustly as high-end drive models in the factory prior to shipping, and they may have a higher chance of being DOA (dead on arrival). Quality issues on storage devices can generally be addressed with burn-in testing, or weeding out infant mortality at the factory prior to shipping to the customer. A poor quality rate would increase the number of returns a vendor would get. Some compatibility issues could get lumped into a quality issue if the vendor didn’t qualify the device on a specific platform and they get returned because of system incompatibility.

Reliability: Reliability issues are caused by extended use of the device. Enterprise SSDs and HDDs are rated at 2M or 2.5M hours MTBF (Mean Time Between Failures), which equates to 0.44% and 0.35% AFR (Annual Failure Rate), respectively. MTBF and AFR are the same metric expressed in different ways. Mean Time Between Failures is the total number of drive hours before a failure is expected. This does not mean a single drive can last for 2 million hours; it means if you have a collection of drives running, you should expect one failure every 2M total drive power-on hours (the sum of all the drives). Annual Failure Rate expresses that an individual drive has a 0.44% chance of failing in any given year, based on the data from a large population of drives. Vendors run a reliability demonstration test to determine the failure rate of a specific device and firmware combination.
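The MTBF to AFR conversion is just the number of hours in a year divided by the MTBF. A minimal sketch, assuming drives are powered on 24x7 (8,760 hours per year); the function names and the 1,000 drive fleet are only for illustration:

```python
HOURS_PER_YEAR = 8760

def afr_from_mtbf(mtbf_hours):
    """Annual failure rate as a fraction, using the simple ratio hours/MTBF."""
    return HOURS_PER_YEAR / mtbf_hours

def expected_fleet_failures(drive_count, mtbf_hours, years=1):
    """Expected failures for a fleet: total power-on hours divided by MTBF."""
    total_power_on_hours = drive_count * HOURS_PER_YEAR * years
    return total_power_on_hours / mtbf_hours

for mtbf in (2_000_000, 2_500_000):
    print(f"{mtbf:,} hours MTBF -> {afr_from_mtbf(mtbf) * 100:.2f}% AFR")

# A hypothetical fleet of 1,000 drives rated at 2M hours MTBF.
print(f"{expected_fleet_failures(1000, 2_000_000):.2f} expected failures per year")
```

The fleet example shows why MTBF is a population metric: 1,000 drives accumulate 8.76M power-on hours in a year, so you would expect around four failures per year rather than any single drive lasting 2 million hours.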

I wrote a blog post on how hard drives fail on Chia a while back.

And I did a series of presentations on how SSDs fail, and some blog posts on NVM Express. I should refresh these because of all the new features in NVMe!

https://nvmexpress.org/how-ssds-fail-nvme-ssd-management-error-reporting-and-logging-capabilities/

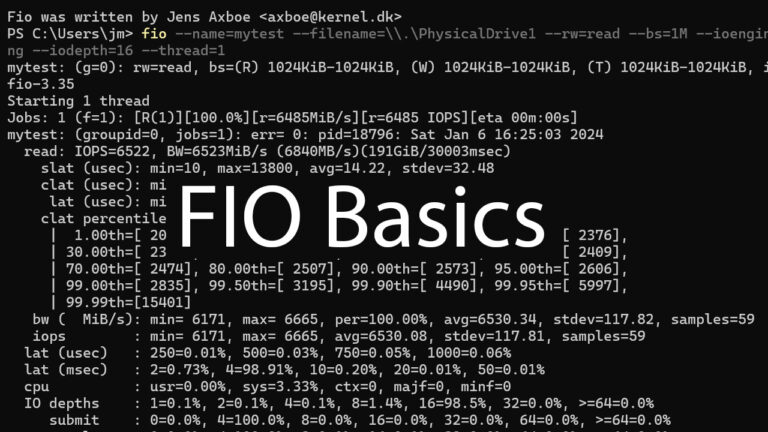

Endurance: SSDs have finite endurance, which is the amount of data you can write to the SSD before the device wears out and can no longer store data safely. SSD vendors specify this in two ways: TBW (terabytes written) or DWPD (drive writes per day), which is an easy metric of how much you can write to the device every day of the warranty period. JEDEC defines failure of the SSD in this case as the inability to reliably store data: either the drive has a higher than rated error rate, or the retention (ability to store data while powered off) at a specific time and temperature cannot be met. Many things affect SSD endurance, but understanding WAF (write amplification factor) is the MOST important thing one can do to learn about SSD endurance…but this is a topic for a future post.
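TBW and DWPD describe the same endurance rating, so you can convert between them if you know the drive capacity and the warranty period. A quick sketch of the commonly used conversion; the 3.84 TB capacity and 5 year warranty below are example values I picked, not numbers from a specific drive:

```python
DAYS_PER_YEAR = 365

def tbw_from_dwpd(dwpd, capacity_tb, warranty_years):
    """Total terabytes written allowed over the warranty period."""
    return dwpd * capacity_tb * DAYS_PER_YEAR * warranty_years

def dwpd_from_tbw(tbw, capacity_tb, warranty_years):
    """Drive writes per day implied by a TBW rating."""
    return tbw / (capacity_tb * DAYS_PER_YEAR * warranty_years)

# Example values only: a 3.84 TB drive rated at 1 DWPD with a 5 year warranty.
tbw = tbw_from_dwpd(1, 3.84, 5)
print(f"{tbw:.0f} TBW")
print(f"{dwpd_from_tbw(tbw, 3.84, 5):.2f} DWPD")
```

Note that both numbers count host writes. How much the NAND actually wears for each host write depends on write amplification, which this conversion ignores.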